Over the years I've sketched some notes about dealing with Pursuit in the new Registry world, so what follows is a rough cut of those, tailored to this thread. As the quote goes, I apologise for the long letter, as I didn't have the leisure to make it shorter.

Background on Pursuit V1

Pursuit is currently the home of all PureScript documentation, and an informal registry - if you can find a package there, then you can probably use it in your project.

Traditionally you would get your documentation in there by calling pulp publish, which:

- cut a git tag in your repo

- collected some info from Bower to pass to the compiler

- called purs publish with those, to get the compiler to gather all the docs in the project and generate docs.json files and a resolution of your package versions/addresses

- packaged all of that up, and hit Pursuit’s API with the result

- at that point Pursuit would call the compiler itself with a custom renderer to generate HTML docs (from the docs.json files), and cross-link them to the GitHub repos (through the resolution file), to get nice source links

- That's it: your docs are published, and Pursuit will serve them itself

A vision for Pursuit V2

Pursuit V1 is based on the requirements of a PureScript registry that relies on Bower. The new Registry changes some of those requirements (e.g. no Bower, support for monorepos, git hosting on providers other than GitHub, etc.), which means we either patch Pursuit V1 or use something else to render the docs.

At this point it seems fairly clear to me that neither the core team nor the community has much appetite for maintaining a web service written in Haskell, so a new thing it is. This gives us the opportunity to rethink the current model in the wake of the new Registry.

What do we expect from something like Pursuit?

Currently:

- host compiler-generated docs, patched with links to the original source, and the package READMEs

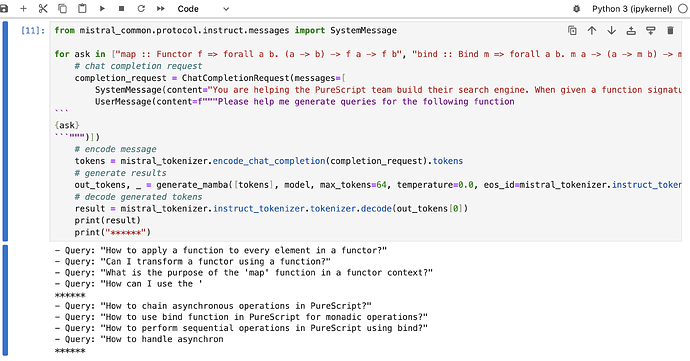

- search for packages, functions, and types

Now, if we are going to ditch Pursuit V1 entirely, I question the need for a web server in the first place: compiler-generated docs are entirely static files, READMEs are as well, and wonderful languages such as PureScript allow us to implement client-side search, provided the search index can be broken up into small enough chunks. These index chunks are static files as well!

Pursuit can then be hosted entirely on an S3-like service, alongside the Registry's packages (which we host on DigitalOcean's S3-compatible service, called Spaces).
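To make "the search index is just static chunks" a bit more concrete, here is a minimal TypeScript sketch. It is not how docs-search actually shards its index: the `IndexEntry` shape, the two-character prefix sharding, and the `index/<prefix>.json` layout are all assumptions for illustration. At build time the entries are grouped into one static file per prefix; at query time the client only needs the single file matching the query's prefix.

```typescript
// Hypothetical shape of a search-index entry (not the real docs-search format).
interface IndexEntry {
  name: string;        // identifier, e.g. "map"
  module: string;      // e.g. "Data.Functor"
  packageName: string; // e.g. "prelude"
}

// Assumption: shards are keyed by the first two lowercase characters of a name.
const PREFIX_LENGTH = 2;

function shardKey(name: string): string {
  return name.toLowerCase().slice(0, PREFIX_LENGTH);
}

// Hypothetical static path of a shard, e.g. "index/ma.json".
function shardPath(key: string): string {
  return `index/${encodeURIComponent(key)}.json`;
}

// Build time: group entries into shards, one static file per key.
function buildShards(entries: IndexEntry[]): Map<string, IndexEntry[]> {
  const shards = new Map<string, IndexEntry[]>();
  for (const entry of entries) {
    const key = shardKey(entry.name);
    const shard = shards.get(key);
    if (shard) {
      shard.push(entry);
    } else {
      shards.set(key, [entry]);
    }
  }
  return shards;
}

// Query time: load only the shard matching the query's prefix, then filter it.
// `loadShard` stands in for something like `fetch(path)` plus JSON parsing;
// it is synchronous here only to keep the sketch short and testable.
function search(
  query: string,
  loadShard: (path: string) => IndexEntry[],
): IndexEntry[] {
  const q = query.toLowerCase();
  if (q.length < PREFIX_LENGTH) {
    return []; // too short to pick a single shard
  }
  return loadShard(shardPath(shardKey(q))).filter((entry) =>
    entry.name.toLowerCase().startsWith(q),
  );
}
```

With this layout, a search for `map` reads only `index/ma.json`, however large the rest of the index grows; the open question is how small each shard can be kept in practice.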

This line of thinking is actually much older than the new Registry, as Spago has integrated an approximation of Pursuit since 2019 (gosh, that was 5 years ago, time flies): spago docs generates docs with the compiler, builds a sharded search index over the whole package set, and adds a client-side app that lets you search these docs.

This covers almost all of the requirements listed above:

- we get compiler-generated docs, but they have neither links to GitHub nor the package READMEs

- the type-search is roughly on-par with Pursuit

The first point above is where the whole purs publish issue ties in: as detailed in the Registry ticket and the gist that Thomas linked above, purs publish is the piece of the compiler that fills in information about re-exports, while at the same time imposing restrictions (clean git tree, etc.).

We currently call purs publish in the Registry pipeline to upload docs to Pursuit, but to allow things like monorepo publishing we should either patch the current purs publish (hopefully without breaking the current Pursuit) or work around it. In the gist above I propose patching purs docs to add the information we need instead, but today I believe we should just patch purs publish to remove all the restrictions.

Once we have this functionality, the Registry pipeline (i.e. the batch job that is kicked off on our infra when you call spago publish) can call purs docs, patch the HTML to reference GitHub locations, generate the index, and upload the index and HTML to S3.
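Patching the HTML to reference source locations mostly boils down to turning a declaration's source span into a URL on the hosting provider, at the git ref that was published. Here is a minimal sketch; the `Location` and `SourceSpan` shapes and the `sourceLink` helper are hypothetical (they are not the Registry's or the compiler's types), and the URL patterns follow the usual GitHub and GitLab blob-URL formats. The optional `subdir` is the extra piece that monorepo support would need.

```typescript
// Hypothetical description of where a package's sources are hosted.
interface Location {
  host: "github" | "gitlab";
  owner: string;
  repo: string;
  subdir?: string; // package directory inside a monorepo, if any
}

// Hypothetical source span: file path relative to the package root,
// with 1-based, inclusive line numbers.
interface SourceSpan {
  path: string;
  startLine: number;
  endLine: number;
}

function sourceLink(loc: Location, ref: string, span: SourceSpan): string {
  // Prefix the file with the monorepo subdirectory, trimming stray slashes.
  const dir = (loc.subdir ?? "").replace(/^\/+|\/+$/g, "");
  const file = dir ? `${dir}/${span.path}` : span.path;
  const base = `${loc.owner}/${loc.repo}`;
  if (loc.host === "github") {
    // GitHub: /blob/<ref>/<path>#L<start>-L<end>
    return `https://github.com/${base}/blob/${ref}/${file}#L${span.startLine}-L${span.endLine}`;
  }
  // GitLab: /-/blob/<ref>/<path>#L<start>-<end>
  return `https://gitlab.com/${base}/-/blob/${ref}/${file}#L${span.startLine}-${span.endLine}`;
}
```

Supporting another provider would mean adding one more branch here; the rest of the pipeline would not need to change.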

This is the bare minimum that would get us to parity with Pursuit, but once things are integrated with the Registry pipeline, we can easily add info about package sets, compiler versions, the Registry index, etc., since the pipeline already deals with that info anyway.

The path to Pursuit V2

I want to stress that most of the pieces are already there thanks to docs-search. Things that are missing:

- patch purs docs to add reExports (see the gist linked above), or patch purs publish to remove the git restrictions

- patch the generated docs to include READMEs and source links to GitHub (or other hosting providers)

As with every approach, there are risk factors that are not quantified at the moment (these are the known unknowns; unknown unknowns are not listed, for obvious reasons):

- docs-search works off a whole package set to generate its index, so it never has to worry about incremental updates. The Registry pipeline will need to update the index efficiently, to avoid busting the cache through unstable sharding, where a new package triggers the regeneration of many chunks. I don't have a clear view of what the current chunking logic would do in this case, but I do believe it's possible, and maybe even easy, to make the sharding of the index stable.

- for client-side search to work well on poor connections, the shards can't be too big or too numerous, or the client will have to download a ton of data, which is not always possible (or pleasant). We'll need to benchmark that. However, this one is easy to solve if we can't shrink the index to an acceptable size: a web server could answer search queries as a very thin layer acting as a "local client", i.e. it would download the whole index locally and answer queries from the internet using that local copy. This would still be an improvement over the current situation, as all the other assets would still be static files, with only search being server-based.
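On the first risk above (stable sharding), here is a small TypeScript sketch of why the choice of sharding key matters for incremental updates. Both strategies are hypothetical stand-ins, not what docs-search currently does: cutting the sorted list of names into fixed-size chunks means one new name shifts every chunk after it, whereas keying each shard by something derived only from the entry itself (here, the first two characters of its name) means a new name only touches its own shard.

```typescript
// Shard id -> names in that shard, in sorted order.
type Shards = Map<string, string[]>;

// Unstable: cut the sorted list into fixed-size chunks. An insertion shifts
// every later entry into a different chunk.
function contiguousShards(names: string[], chunkSize: number): Shards {
  const sorted = [...names].sort();
  const shards: Shards = new Map();
  for (let i = 0; i < sorted.length; i += chunkSize) {
    shards.set(String(i / chunkSize), sorted.slice(i, i + chunkSize));
  }
  return shards;
}

// Stable: the shard id depends only on the name itself (its two-character
// lowercase prefix), so an insertion touches exactly one shard.
function prefixShards(names: string[]): Shards {
  const shards: Shards = new Map();
  for (const name of [...names].sort()) {
    const id = name.toLowerCase().slice(0, 2);
    const shard = shards.get(id);
    if (shard) {
      shard.push(name);
    } else {
      shards.set(id, [name]);
    }
  }
  return shards;
}

// Number of shard files that differ between two versions of the index:
// these are the files to regenerate, re-upload and evict from caches.
function changedShards(before: Shards, after: Shards): number {
  const ids = new Set<string>(Array.from(before.keys()).concat(Array.from(after.keys())));
  let changed = 0;
  ids.forEach((id) => {
    const a = (before.get(id) ?? []).join("\n");
    const b = (after.get(id) ?? []).join("\n");
    if (a !== b) {
      changed++;
    }
  });
  return changed;
}
```

For example, starting from the ten names `map`, `mapFlipped`, `append`, `apply`, `bind`, `pure`, `show`, `eq`, `compare`, `identity` and adding `alt`, all 4 contiguous chunks of size 3 change, while only 1 prefix shard (the new `al` one) does.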